Keeping Tests Valuable: Avoid False Negatives!

Preventing false negatives in unit tests is crucial to ensure the reliability and effectiveness of applications.

Continuing our series: in the last post we talked about feature regressions and the important role unit tests play in combating them. This post stays on the topic of writing tests that prevent regressions, but focuses on a danger we must always watch for: tests that generate false negatives.

We will look at what false negatives are, the harm they do to software reliability and quality, and how to write tests that avoid them. Let's start.

📍Watch out for false negatives!

A false negative means that a test case passes even though the software contains the bug that the test was intended to catch.

A false negative indicates that there is no bug when there is one.

OK, but how does this happen? Let me demonstrate with an example in C#. It is a didactic example, meant only to illustrate how a false negative can be identified. The class in the example below is responsible for checking whether a user can access a specific resource based on their age, country, and premium subscription. Let's also assume that a developer has just refactored this code.

During the refactoring, the developer was not paying attention and ended up introducing the wrong logical operator:

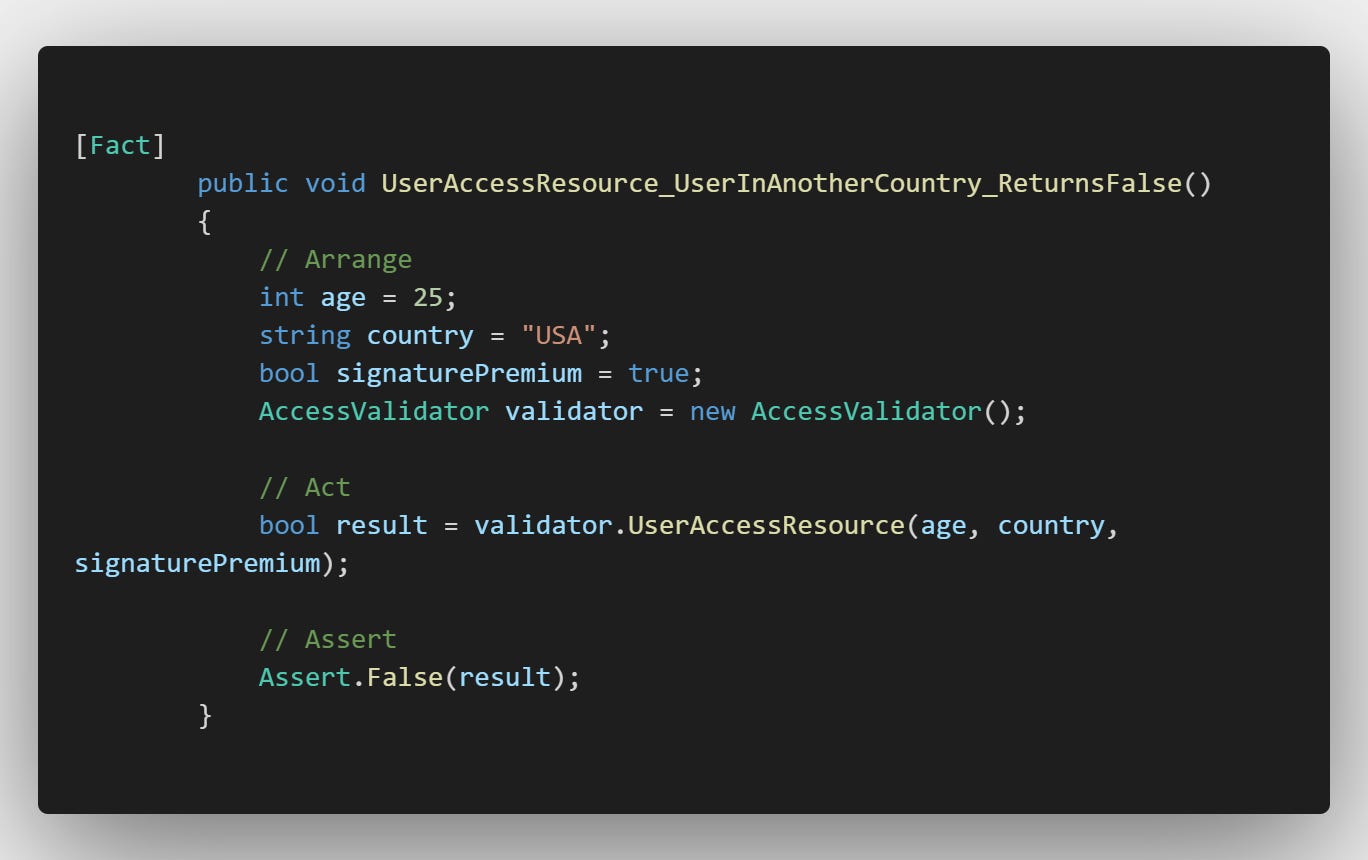

Now check out the test, which was written without planning and with little attention:

In this example, the test checks whether a user who is 19 years old, from Brazil and without a premium subscription can access the resource. The test will pass because the condition will be true:

age >= AgeMinimum && country == "Brazil" || hasPremiumSubscription

The logical "OR" operator ( || ) is not adequate for this case. It combines two boolean expressions and returns true if at least one of them is true. Because && binds more tightly than ||, the expression is evaluated as (age >= AgeMinimum && country == "Brazil") || hasPremiumSubscription, so using this operator in the return statement is incorrect in this context.

But the real requirement is that the user must be of minimum age, live in Brazil and have a premium subscription (all criteria must be true) to access the feature. In this case, the test does not reveal the problem in the code and gives a false negative.
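Since the listings in this example are images, here is a minimal text sketch of the situation. It assumes xUnit as the test framework, a minimum age of 18, and a hypothetical test name; none of these are confirmed by the text above, so treat it as an illustration rather than the original code.

```csharp
using Xunit;

// Hypothetical reconstruction: member names and the value of AgeMinimum are assumptions.
public class AccessValidator
{
    private const int AgeMinimum = 18; // assumed threshold

    public bool UserAccessResource(int age, string country, bool hasPremiumSubscription)
    {
        // BUG: && binds tighter than ||, so this is evaluated as
        // (age >= AgeMinimum && country == "Brazil") || hasPremiumSubscription.
        return age >= AgeMinimum && country == "Brazil" || hasPremiumSubscription;
    }
}

public class AccessValidatorTests
{
    [Fact]
    public void UserAccessResource_AdultUserInBrazil_ReturnsTrue() // hypothetical name
    {
        var validator = new AccessValidator();

        // 19 years old, from Brazil, no premium subscription.
        bool canAccess = validator.UserAccessResource(19, "Brazil", false);

        // Passes against the buggy code: (true && true) || false is true.
        // Under the "all three criteria" requirement this user should be denied,
        // so the green result hides the bug.
        Assert.True(canAccess);
    }
}
```

The test is green, yet the wrong operator is still in the code. That combination is exactly what makes it a false negative.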

The requirement may have been understood correctly (or maybe not), but for some reason the developer used the wrong logical operator. It could also be that the developer was not focused enough, or was in a hurry to deliver the task, and therefore wrote this meaningless unit test. This demonstrates an important point: we should think through and write many different scenarios in our tests. If the developer and the team feel that this single test is enough, or if no code review is done, the bug will slip through.

What would help to detect this false negative? Writing other test cases! We cannot be satisfied with tests that only expect true; we also need tests whose expected result is false. See the example below:

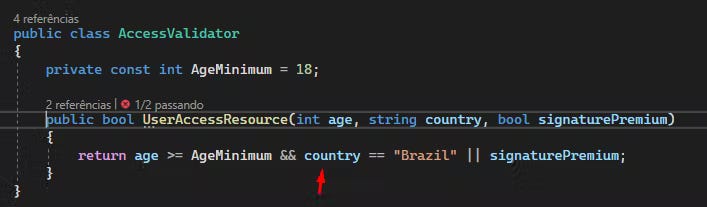

This test was written to pass (turning green ✅), since we expect the method to return false: after all, the input "USA" is not valid according to the requirements.

But we know the unit test will turn red and won't pass (🛑). By considering other inputs and other test cases, we bring more confidence to our test suite:

According to the current implementation of the AccessValidator class, after the wrong refactoring, the expression age >= AgeMinimum && country == "Brazil" || hasPremiumSubscription returns true if the user has a premium subscription, even if they are in a country other than Brazil.

So in the new test UserAccessResource_UserInAnotherCountry_ReturnsFalse() that we just wrote, with "USA" as the input country, the UserAccessResource() method returns true even though the user does not meet all the criteria. After all, the logic is wrong.

This way the test will fail 🛑: the method is expected to return false in this situation, so we declare an Assert.False, which receives true as the result. With this test, we are able to catch the regression in functionality!
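A minimal sketch of that negative test, shown next to one possible fix. It assumes xUnit, a minimum age of 18, and a 19-year-old premium user as input; those details are not stated in the text, so they are only illustrative.

```csharp
using Xunit;

// One possible correction (an assumption, not the author's listing):
// every criterion is joined with &&.
public class AccessValidator
{
    private const int AgeMinimum = 18; // assumed threshold

    public bool UserAccessResource(int age, string country, bool hasPremiumSubscription)
    {
        return age >= AgeMinimum && country == "Brazil" && hasPremiumSubscription;
    }
}

public class AccessValidatorNegativeTests
{
    [Fact]
    public void UserAccessResource_UserInAnotherCountry_ReturnsFalse()
    {
        var validator = new AccessValidator();

        // Premium user of valid age, but outside Brazil.
        bool canAccess = validator.UserAccessResource(19, "USA", true);

        // Against the "||" version this value is true and the test goes red.
        // Against the corrected version above it is false and the test goes green.
        Assert.False(canAccess);
    }
}
```

The same test therefore serves two purposes: it exposes the bug today and guards against the same regression in future refactorings.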

But what is the relationship between regressions and tests that generate false negatives? It lies in the ability of tests to detect problems introduced by new changes in the code. If automated tests fail to identify a regression because they generate false negatives, the number of bugs and problems in the software can grow. Besides, this does not build trust for anyone! Is it still not clear? We can make another analogy. Please be patient with me 😂.

Let's separate the analogy into two steps.

Imagine that you are the coach of the team you have been supporting since childhood. Your team is performing well, every position works, and your players get along in good harmony. Then, to give the reserve players a chance, you decide to change the tactical scheme, adding a new player and adjusting the position of some others. A regression of functionality would be as if, after this change, a part of your team started to play worse than before. It could be the midfield or even the attack. Maybe the players are not getting along, or the new player is not fitting well into the tactical scheme. There has been a regression in your team's performance, which is equivalent to a regression of functionality in software development.

But what about the false negatives? Still using the soccer team example, imagine that you run specific training sessions to test the effectiveness of your new tactical scheme. During training, everything seems to be working perfectly. However, when it comes time for the official game, your team does not perform as well as it did in training, and gaps appear. The training sessions failed to detect these flaws, that is, there were false negatives.

Just as the coach must identify flaws in the team's performance and correct the strategies, we as software engineers must ensure that testing is effective in identifying feature regressions.

If testing fails to detect these regressions because there are always false negatives, the quality of the software will suffer. False negatives allow functionality regressions to go undetected since unit tests are not capturing the problems present in the code.

This can lead to feature regressions not being identified and fixed at development time, creating problems for end users, which can lead to lower software quality and reliability.

Okay, you've said enough about the problems, what about the solution? I can list some tips, which I hope will be helpful:

Plan and write comprehensive and efficient tests, ensuring that critical functionality and boundary cases are tested. Stop writing tests in a hurry and without planning. It does not benefit you.

Update tests as the software evolves and its complexity increases so that they reflect changes in the code. Tests that only cover small parts of the behavior, but don't exercise the main features and critical functionality, are practically useless.

Run regression tests frequently, to ensure that new changes do not negatively affect the behavior of the system.

Involve QAs when many questions arise. They are usually domain experts. They know many rules and are in constant contact with the customer.

Implement code review and continuous integration processes, to identify and fix bugs as early as possible. Code review is essential, where other devs can give suggestions and point out flaws, both in unit tests and in the implemented code itself.

Don't create tests that only expect positive cases or true returns as final results. Test that the behavior also fails and returns false when the rule is not fulfilled and does not conform to the requirements!

It is the role of all developers to collaborate to write tests that detect regressions! We all need to ensure that testing is effective and avoids false negatives.
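The tips about boundary cases and negative expectations can be sketched with a parameterized test. This is only an illustration: it assumes xUnit's [Theory] and [InlineData] attributes, a minimum age of 18, and a corrected validator in which all three criteria must hold.

```csharp
using Xunit;

// Hypothetical corrected validator; AgeMinimum = 18 is an assumption.
public class AccessValidator
{
    private const int AgeMinimum = 18;

    public bool UserAccessResource(int age, string country, bool hasPremiumSubscription)
    {
        return age >= AgeMinimum && country == "Brazil" && hasPremiumSubscription;
    }
}

public class AccessValidatorScenarioTests
{
    [Theory]
    [InlineData(18, "Brazil", true, true)]   // boundary: exactly the minimum age
    [InlineData(17, "Brazil", true, false)]  // one year below the minimum
    [InlineData(19, "USA", true, false)]     // wrong country
    [InlineData(19, "Brazil", false, false)] // no premium subscription
    [InlineData(17, "USA", false, false)]    // no criterion satisfied
    public void UserAccessResource_VariousInputs_ReturnsExpected(
        int age, string country, bool hasPremiumSubscription, bool expected)
    {
        var validator = new AccessValidator();

        bool canAccess = validator.UserAccessResource(age, country, hasPremiumSubscription);

        Assert.Equal(expected, canAccess);
    }
}
```

Each row breaks at most one criterion at a time, so a wrong operator anywhere in the condition turns at least one row red. Against the "||" version of the condition, the three middle rows would fail.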

📍How do false negatives break customer or user trust in the software?

Let's tell a short story. Joana is a self-employed professional who uses an invoicing application called InvoicePro to manage her invoices and payments. Recently, the InvoicePro development team released an update with new features and interface improvements.

However, a false negative in one of the unit tests missed a regression in the tax calculation functionality. The test verified that the tax was calculated correctly, but did not detect a specific scenario where the calculation failed for custom tax rates.

After the upgrade, Joana creates a new invoice for a customer and enters a custom tax rate. Upon reviewing the invoice before submitting it, she notices that the tax calculation is incorrect. Joana tries to fix the problem, but cannot find a solution.

Worried about losing credibility with her client, she decides to calculate the tax manually and edit the invoice before sending it. This consumes valuable time and frustrates Joana, who relied on the app to handle these calculations automatically.

The negative experience makes Joana question the reliability of InvoicePro and consider looking for alternatives. This story illustrates how a false negative in unit tests can lead to software issues that directly affect the end user and undermine customer confidence in the product.
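To make the story concrete, here is a purely hypothetical sketch. InvoicePro is fictional, so the TaxCalculator class, its 10% default rate, and the test names are all invented for illustration, and xUnit is assumed as the test framework.

```csharp
using Xunit;

// Hypothetical calculator: every name and number here is an assumption.
public class TaxCalculator
{
    private const decimal DefaultRate = 0.10m;

    public decimal CalculateTax(decimal amount, decimal? customRate = null)
    {
        // A regression such as "return amount * DefaultRate;" would silently
        // ignore customRate while still satisfying the default-rate test.
        decimal rate = customRate ?? DefaultRate;
        return amount * rate;
    }
}

public class TaxCalculatorTests
{
    [Fact]
    public void CalculateTax_DefaultRate_ReturnsTenPercent()
    {
        // The only scenario the suite in the story covered.
        Assert.Equal(10.00m, new TaxCalculator().CalculateTax(100.00m));
    }

    [Fact]
    public void CalculateTax_CustomRate_UsesCustomRate()
    {
        // The missing scenario: this test would have gone red on the regression.
        Assert.Equal(7.50m, new TaxCalculator().CalculateTax(100.00m, 0.075m));
    }
}
```

With only the first test in place, the suite stays green whether or not custom rates work, which is the kind of gap that reached Joana.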

What lesson can we learn from this story? I will quickly list below 3 lessons that I see as essential:

The importance of effective unit tests: Unit tests that do not detect problems in the code, generating false negatives, can allow errors to go unnoticed and affect the functionality of the software. It is crucial to ensure that tests are well written and cover the relevant scenarios and use cases, including the cases where the rule must fail.

The impact on customer trust: When software has problems that directly affect the end user, customer trust in the product is undermined. Negative experiences can lead customers to look for alternative solutions and affect the product's reputation in the market.

The need for post-release monitoring and support: Even with extensive testing, it is important to monitor the behavior of the software in production and provide efficient support to users. Identifying and fixing issues quickly can help minimize negative impacts on user experience and product confidence.

We can conclude that false negatives in unit tests are serious, as they can cause several problems and negative impacts on development and user experience. It is crucial that software development teams strive to ensure that unit tests are effective and comprehensive, minimizing the possibility of undetected issues.

📍Do false negatives affect deadlines?

Based on everything we've read, we can say yes! Mainly because time always has to be spent investigating the problem, both in the code and in the tests! There is no point in fixing the functionality regression while allowing the false negative to live on within your test suite. Stay alert! Here are the main ways false negatives lead a development team to miss deadlines:

Workflow interruption: When a false negative is discovered, developers may need to stop the work they are currently engaged in to correct the problem. This shift in focus can be detrimental to productivity and overall project planning.

Rework: False negatives can result in rework as developers have to revisit code they thought was working correctly. This rework consumes valuable time and resources that could be used elsewhere in the project.

Late diagnosis of a problem: Since false negatives indicate that a test passed even when there is a problem in the code, developers may not realize a problem exists until it is too late. This can result in a delayed diagnosis, which can lead to more time being spent investigating and resolving the issue.

Increased repair cost: The more time that passes before a problem is identified, the greater the cost to fix it. This is because changes made to the code accumulate and can make the fix more complex. False negatives, therefore, can lead to an increase in the time and effort required to correct problems.

Most of us have already faced everything listed above! But do we need to continue this vicious cycle? No! We can write tests that convey confidence and fail only when necessary! But for that, everyone should be aware that it takes dedication to achieve a safe and effective set of tests!

📍Conclusion

In conclusion, false negatives in unit tests represent a significant problem in software development, as they can lead to the introduction of errors and quality issues in the final product. To meet this challenge, development teams must adopt sound testing practices, promote code reviews and pairing, and invest in training and awareness about the importance of effective unit testing.

In addition, it is essential to monitor the behavior of the software in production and provide efficient support to users, quickly identifying and correcting problems. This can help minimize the negative impact of tests that generate false negatives on user experience and product confidence.

Thank you so much for reading until the end, I hope the tips can help! Please like and share! Until the next article! 😄🖐️

Books that I consider essential for everyone ✅:

Effective Software Testing: A Developer's Guide - by Mauricio Aniche

Unit Testing Principles, Practices, and Patterns - by Vladimir Khorikov