Keeping Tests Valuable: Be Careful With Regressions!

The more effective the tests are, the more security they convey to us in the fight against regressions!

Writing tests is also a task that requires our attention to maintain the quality of the software! In this article, we will talk about regressions, understand their dangers and how they usually occur, how to identify possible regressions, present some examples, and comment on the benefits of writing tests that have these essential characteristics.

Let's start by better understanding what regression is in the context of software engineering.

📍 What is regression?

I always like, before diving into any theme, to understand the origins of the subject. I usually start by understanding the meaning of the word, which in this case is regression. The word comes from the Latin "regressus", which means "to return to a previous state or place". Further researching the word in English in the dictionary, I found some interesting references that I will list below:

a situation where things get worse instead of better.

Who has never been in a situation like this? Either in personal aspects of life or at work? Now look at another interesting description for the word regression:

a return to a previous and less advanced or worse state, condition, or behavior.

Usually, when a person takes his car that has some mechanical problem to be analyzed and repaired, we always hope that the problem will be solved and the car will return to the correct and better condition. We never want the state or condition of the car to return to a worse condition. The same can be said for software! First, we have to understand that a regression in software means that a feature stopped working as planned after some modification in the code, usually after implementing a new feature. And this is something very serious! Usually, regressions are feared by everyone, especially in large corporations where the impact of an important feature stopping working can generate losses of millions! And there are two types of regression: functional and non-functional. See the differences:

Functional: This is the most common form we see and occurs when a change in the code leads to a break in a functionality that is part of the existing business solution.

Non-functional: This is a less common form of regression, and occurs when a change in the code leads to a degradation in non-functional aspects of the software, such as performance, scalability, or security. For example, if a code change results in a significant increase in software latency, processing overhead, and so on.

These types of regressions can go undetected. Our focus here in this article is functional regressions. So let's talk about how unit testing can help detect regressions.

📍 What is the role of unit tests in helping to detect regressions?

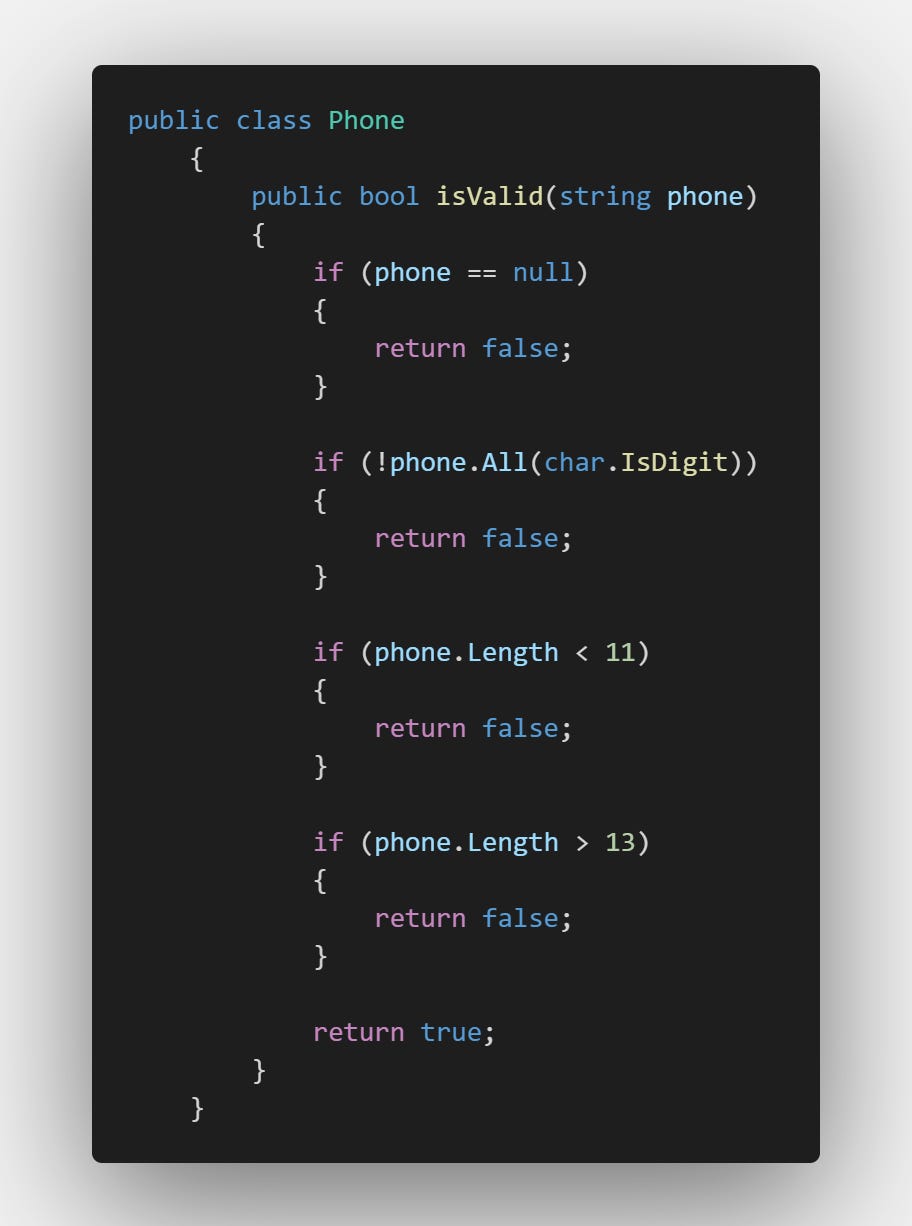

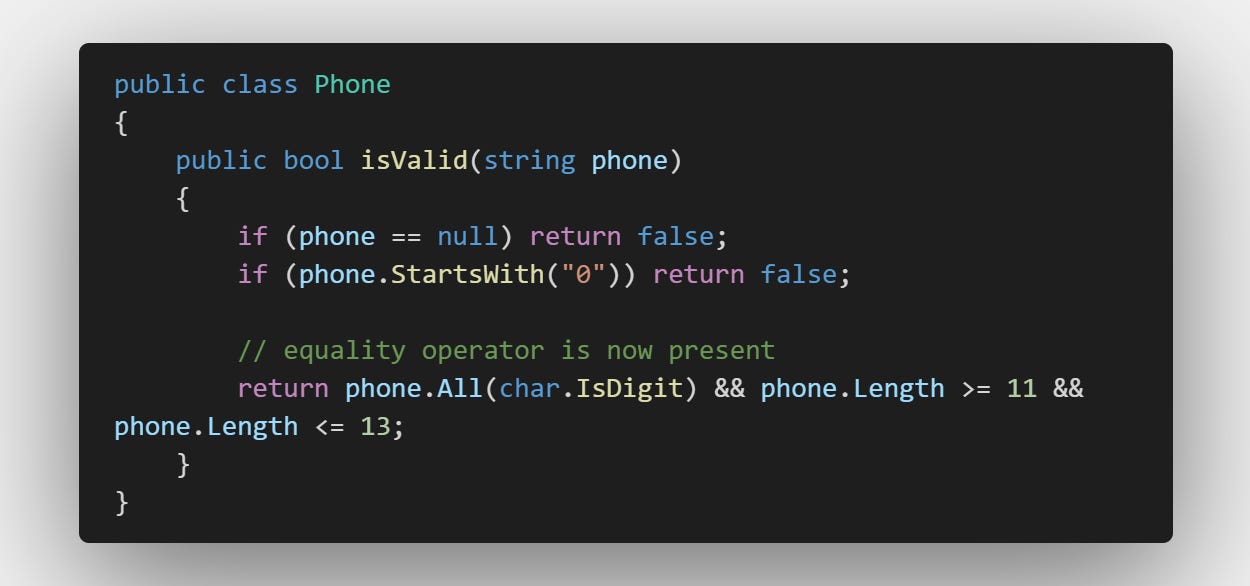

We often end up underestimating the role of unit tests. But they can help a lot, especially in avoiding regressions. Let's look at some examples, first in a didactic way. Take a look at the class below:

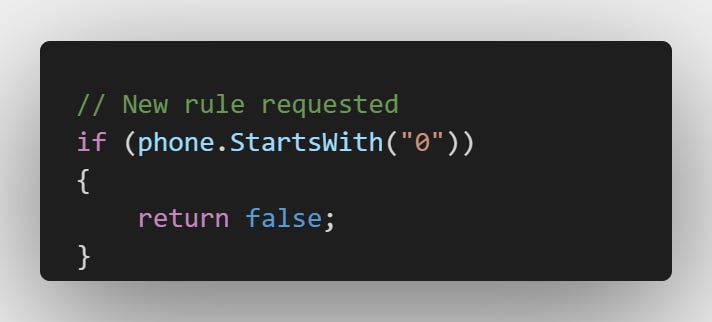

The class is quite simple and seeks to validate a phone with the criteria in the conditional structures, but we will add a new rule for the Phone class 👇:

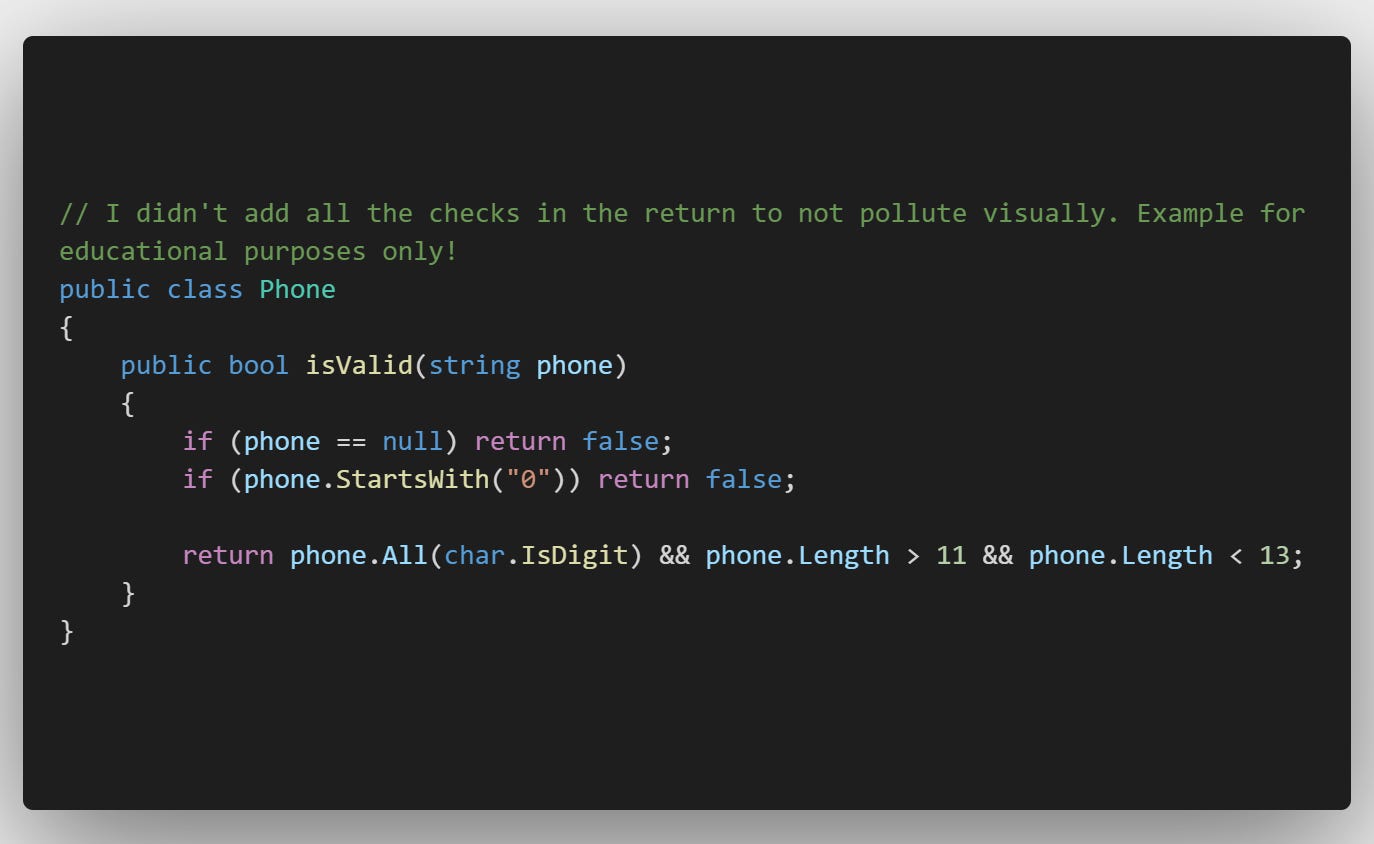

The developer then runs the tests and everything looks fine. Also, after the new check that the class performs, the developer implements a simple refactoring, see how it looks:

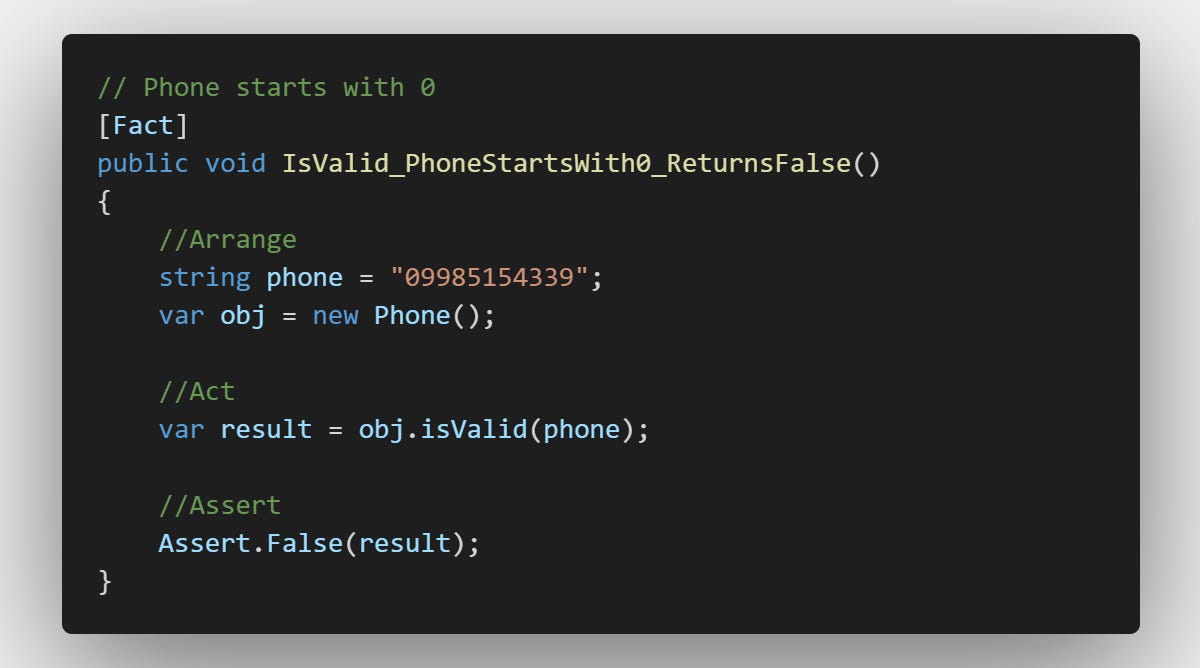

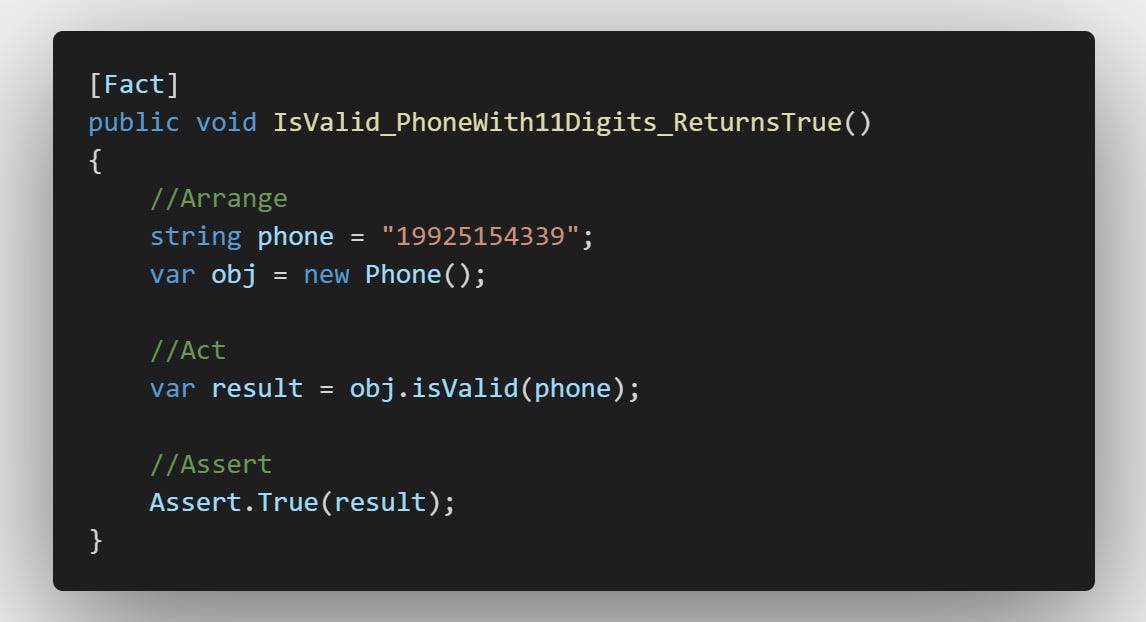

Now everything looks perfect, let's create a new test to verify that the new validation behaves as expected:

Naturally, is expected to run all tests or an automation tool does this, for example after a push to the repository. But a test that was already written by another developer fails, see the test that failed:

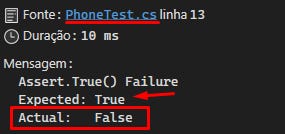

This was not what the developer expected, so he parses the failed test, sees below:

To find the problem the easiest and perhaps quickest way is to debug, but we can do a logical exercise here. Before refactoring, each conditional structure contained its own returns. But now we have a single conditional structure with the logical && (AND) operator that appears twice!

return phone.All(char.IsDigit) && phone.Length > 11 && phone.Length < 13;Notice one important point, if the string contains only numbers, is greater than 11 and less than 13 the return is true, but what if the string is equal to 11 and 13?

The previous behavior of the code, which is the expected behavior by the client and stated in the requirements, considered a string with a length of 11 and 13 characters to be valid. But not anymore! The behavior of the class has been broken. So what does this show us? First, the unit test prevented a functionality regression!

Second, if unit tests did not exist, you would need a manual test to be able to detect this regression. Do you understand more clearly the importance of unit testing in the fight against functionality regression? If the user's phone number happened to contain 12 characters, that would be fine, and the validation would be accepted. But any other user who had a phone number with 11 or 13 characters would not have a valid phone number for the system.

With unit tests, regressions are easy to detect, bringing confidence to the developers and the QA team. Now, after the test has failed the developer can reliably detect the problem and fix the regression:

The declared equality operator now allows a string with a length equal to 11 or 13 characters. You might be thinking, "Wouldn't this fit in as refactoring resistance?" No! In this case, the final behavior was changed, not because of the refactoring, but because of incorrect logic implemented during the refactoring process. After refactoring the observable behavior was changed because the developer did not try to make sure of the requirements. So it is clear that this is a case where the unit tests helped to detect a regression. Here is the definition of refactoring:

Refactoring means changing existing code without changing its observable behavior. The intention is to improve the non-functional characteristics of the code, such as making the code easier to read and decreasing the overall complexity of the algorithm.

Let's now understand what strategies we can adopt to improve the scope of our tests in regressions.

📍 How to increase the chances of tests revealing regressions?

This may be a deep subject, but it is important to comment on. After all, how can we increase the chances of tests revealing regressions of already existing functionality? Well, we can try to mention two strategies.

The first one that I consider essential is to identify components that are importants and are usually responsible for validating and executing rules that are critical to the system. Why? Usually, in these classes or components, a regression of functionality can mean incalculable damage to the business. In large applications that follow good practices and principles, business rules live in the domain. We can say that there are great validations and rules. One strategy to increase the chances of finding regressions is to focus on testing behaviors of these classes and components focusing on requirements. We must remember that the more code we have in a system, the more exposed we are to the risk of making changes that will regress the software to a state of unacceptable behavior, so it is important to ensure that these features are always protected with quality testing. The big problem? Usually, many developers do not have the privilege of working on systems that have an acceptable code design and architecture, and many validations are at various points in the application. I have experienced this myself. So how can we still use unit tests to avoid regressions in this scenario? A book that helped me and still helps is Martin Fowler's Refactoring Improving the Design of Existing Code, this book provides essential tips for refactoring methods, classes and components safely, and best of all, using tests!

The second strategy we can adopt is to analyze the complexity of the code. Why? When the complexity of the code increases, the chances of functionality regressions also increase. Sometimes the flow is easy to understand, but the coupling and methods with difficult and large names, make it difficult for the developer to add new functionality to an existing class, we can quickly cite other factors:

Interdependencies: In complex systems, components and code modules tend to be more interconnected and interdependent. Changes in one part of the system can cause unwanted and unexpected effects in other parts.

Difficult to understand: Complex code is more difficult to understand and analyze. This increases the likelihood that developers will introduce errors or modify parts of the code that affect existing functionality.

Code reuse: Complexity often leads to code reuse, which can be beneficial in terms of efficiency, but can also result in problems when changes in one component are propagated to other components that reuse it.

Another point to note is that the more complex the business logic becomes and with more flows, the chances of regressions also increase because it becomes more difficult for developers to understand and manage the interactions between different parts of the system. We must not forget that this also increases the probability of errors or misunderstandings occurring, leading to unintended changes in the behavior of the software. We can make a very brief analogy to make it easy to understand how critical this complexity factor is.

Think of a tangle of electrical wires, where each wire represents for us a part of the code. When the wires are well organized and identified, it is easy to trace and solve problems. However, as the tangle of wires increases in complexity, it becomes increasingly difficult to identify which wire is connected to which component. Consequently, a change in one wire is more likely to affect other components of the system in unintended ways, leading to regressions. To avoid regressions, what do electricians do? Electricians use a multimeter to check that there is a proper connection between two points in a circuit.

This type of test is known as continuity testing. This helps identify whether a wire is connected properly or if there is a break in the circuit. We can also point out that they try to be organized, keeping each wire as separate as possible, and they use labeling to identify different wires.

Just like electricians, we have unit tests to verify the behavior of the code, we don't need to put this tool aside. The electrician can identify faults in the electrical circuit after adding a new wire or component using testing techniques, and if we also create tests, we can avoid regressions! But it's good to stress that testing must be comprehensive and effective, only then can it help detect and correct regressions quickly, increasing software quality and reliability. And having unit tests makes regressions easier to understand because:

Focuses on expected behavior: Unit tests written with a focus on the expected behavior of the code, rather than its implementation, help identify deviations from the desired behavior, which can signal the presence of regression.

Isolation: Unit tests target specific pieces of code, isolating the functionality under test. This makes it easier to identify the root cause of regression since it is likely to be related to the code or component being tested.

Fast feedback: Unit tests provide fast feedback on the newly written behavior or new feature. When regression occurs, developers can identify it quickly and fix it before it becomes a bigger problem.

The goal of testing is to help the system grow healthily and quickly, bringing confidence and security to everyone involved. So always make a habit of creating quality tests, especially for business-critical functionality!

📍 Benefits of avoiding regressions

Let's quickly see the benefits that I consider important and that are visible quickly when we put into practice tests that avoid regressions:

Simplification of modifications and restructuring: With a wide range of unit tests, developers can carry out code changes and refactorings with greater confidence, knowing that the tests will notify them if any existing functionality is affected.

Reinforcement of product reliability: A comprehensive series of successful unit tests increases the credibility of the software, both for developers and stakeholders, by proving that the product works as intended.

Improved maintainability: Properly written and updated unit tests simplify the software maintenance process, as they help to quickly identify the consequences of code changes and ensure that functionality is not impaired.

Facilitation of integration: Quality unit tests facilitate the incorporation of new features or components, ensuring that modifications do not negatively impact pre-existing software.

I could list others, but the reading is already quite large, so I'll stop here! 😅

Conclusion

In conclusion, maintaining the value of testing is crucial to ensuring continuous improvement and stability of software applications. Regressions pose a significant threat to software quality as they can reintroduce previously resolved problems or create new ones. To keep the tests valuable and avoid regressions, it is essential to adopt practices such as full code reviews, regular testing, automated test suites and effective communication between team members and always try to create more comprehensive tests that look for bugs. Stay alert!

In the next post, we will talk about a subject that sometimes goes unnoticed, but that can also cause regressions and affect the tests, false negatives.

Thank you so much for reading until the end, I hope the tips can help! Please like and share! Until the next article! 😄🖐️